In this blog post I go into the details of how to add an NVMe SSD to an old-ish Intel NUC by replacing the built-in Wi-Fi card that sits in its M.2 slot. The goal was to increase the IOPS performance of my media server from ~50 writes/sec to ~15000 writes/sec and make the whole system more responsive.

Background

In the distant past, my media server ran on a Raspberry Pi 3 B+, with a 2TB drive (Seagate 2TB Black STEA2000400) connected over USB 2.0. Over time, the data rates proved insufficient, so I decided to buy a low-budget (~100GBP) Intel NUC NUC6CAYH. Finally I could move the drive from the external enclosure to the internal (single) SATA port, as well as leverage Intel Quick Sync Video hardware acceleration for my Plex server.

While I was fine with spinning platters for my media, I wanted something solid-state for my root partition. As such, I opted for a high-speed USB 3.0 memory stick to host it: a small 64GB SanDisk Ultra Fit that would sit unobtrusively in the NUC’s external port.

Initially, it worked great: it was fast and responsive. Unfortunately, over time the performance started to suffer. SSHing into the machine took a while, and apt-get upgrade operations were slow as a dog right after a nap.

Diagnosing IOPS

Having previously experienced degrading random I/O performance on USB sticks and SD cards, I decided to figure out whether that was the root cause. The article How to benchmark disk I/O proved a great resource, pointing to the fio tool.

Here is the command line that allocates a 1GB file and performs a 75/25% random read/write benchmark:

sudo fio --randrepeat=1 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=test --filename=test --bs=4k --iodepth=64 --size=1G --readwrite=randrw --rwmixread=75
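For reference, here is the same benchmark split into one flag per line, with a short note on what each flag does. Treat it as a sketch: the `fio_randrw` wrapper and the `DRY_RUN` switch are names made up for this post and are not part of fio, and you may need to prefix the real run with sudo as above.

```shell
# fio_randrw FILE SIZE: the 75/25 random read/write benchmark from above.
# fio_randrw and DRY_RUN are hypothetical helpers, not fio features.
fio_randrw() (
  file=${1:-test}
  size=${2:-1G}
  set -- --name=test "--filename=$file" "--size=$size"
  set -- "$@" --ioengine=libaio   # Linux native asynchronous I/O
  set -- "$@" --direct=1          # O_DIRECT: bypass the page cache
  set -- "$@" --bs=4k             # 4 KiB blocks, i.e. small random accesses
  set -- "$@" --iodepth=64        # keep up to 64 requests in flight
  set -- "$@" --readwrite=randrw  # mixed random reads and writes
  set -- "$@" --rwmixread=75      # 75% reads, 25% writes
  set -- "$@" --randrepeat=1      # repeatable random pattern across runs
  set -- "$@" --gtod_reduce=1     # fewer clock calls, less detailed latency stats
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo fio "$@"                 # only print the command
  else
    fio "$@"                      # actually run the benchmark
  fi
)

# Print the command that would run, without touching the disk
DRY_RUN=1 fio_randrw ./test 1G
```

The `--direct=1` flag matters most here: without it, reads and writes can be served from RAM and the numbers say little about the drive itself.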

The results were… catastrophic. Here are the truncated stats for the SATA HDD:

read: IOPS=149, BW=599KiB/s (613kB/s)(768MiB/1312826msec)

write: IOPS=50, BW=200KiB/s (205kB/s)(256MiB/1312826msec); 0 zone resets

And here they are for the USB 3.0 stick that hosted the root partition:

read: IOPS=79, BW=317KiB/s (325kB/s)(768MiB/2477513msec)

write: IOPS=26, BW=106KiB/s (109kB/s)(256MiB/2477513msec); 0 zone resets

That’s… minuscule. For comparison, here are the stats from the SSD in my laptop, using an 8GB test file:

read: IOPS=58.0k, BW=230MiB/s (241MB/s)(6141MiB/26667msec)

write: IOPS=19.7k, BW=76.9MiB/s (80.6MB/s)(2051MiB/26667msec); 0 zone resets

Yup… that’s roughly 20000 vs 26 write operations per second. That ~750x gap needed fixing.
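As a sanity check on the numbers above, the IOPS and BW columns are two views of the same measurement: with `--bs=4k`, bandwidth in KiB/s is just IOPS times 4. A minimal sketch (the `iops_to_kib_s` helper is a made-up name for this post):

```shell
# iops_to_kib_s IOPS [BLOCK_KIB]: bandwidth in KiB/s for a given IOPS figure.
# Hypothetical helper; the block size defaults to the 4 KiB used in the benchmark.
iops_to_kib_s() {
  echo $(( $1 * ${2:-4} ))
}

iops_to_kib_s 149   # HDD reads: prints 596 (fio reported 599KiB/s)
iops_to_kib_s 26    # USB stick writes: prints 104 (fio reported 106KiB/s)
```

The small differences come from fio showing IOPS as a whole number. The point is that at a few dozen operations per second, 4 KiB at a time, throughput is measured in kilobytes no matter how fast the drive is at sequential transfers.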

Finding the right SSD

Turns out the NUC has an M.2 slot that currently has an Intel® Dual Band Wireless-AC 3168 Wi-Fi+Bluetooth card inside of it. It should be as straightforward as going to Amazon and buying a mini PCIe SSD, right?

Wrong.

Demystifying M.2/PCIe and mSATA

If you search Amazon for mini PCIe SSD you have all sorts of devices, of varying length and varying pin layouts. This is a minefield caused by the adoption of similar connectors for very different purposes.

The old mini PCI Express connector has been reused for SSD drives speaking SATA protocol. That’s why you’ll see a lot of 30 mm x 50.95 mm mSATA SSDs around, targeted at the older laptops.

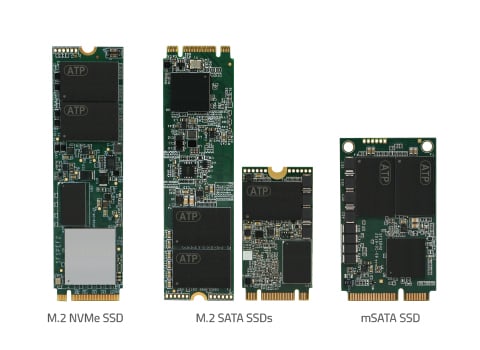

All the possible form factors of SSDs you’ll find. Only the NVMe one is PCI Express.

You’d think that moving to a new M.2 connector would have solved the problem. Unfortunately, the idea of putting SATA into PCIe connectors remained. To understand the situation better, read the excellent article Differences Between an M.2 and mSATA SSD (credit for the image above).

The M.2 connector (22mm in width) was introduced around PCI Express 2.0, and as you probably understand by now, it has multiple variants for different purposes. The Ars Technica article has an excellent summary table:

| Type | Sizes (width and length, mm) | Protocols | Common uses |
|---|---|---|---|
| A | 1630, 2230, 3030 | PCIe x2, USB 2.0, I2C, DisplayPort x4 | Wi-Fi/Bluetooth, cellular cards |
| B | 3042, 2230, 2242, 2260, 2280, 22110 | PCIe x2, SATA, USB 2.0, USB 3.0, audio, PCM, IUM, SSIC, I2C | SATA and PCIe x2 SSDs |
| E | 1630, 2230, 3030 | PCIe x2, USB 2.0, I2C, SDIO, UART, PCM | Wi-Fi/Bluetooth, cellular cards |
| M | 2242, 2260, 2280, 22110 | PCIe x4, SATA | PCIe x4 SSDs |

So, there’s not only PCIe 2.0 vs PCIe 3.0, but also M.2 socket type A, type B, type M and type E. 🤯
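The size codes in the table pack two numbers into one: the first two digits are the card width in millimetres and the remaining digits are the length. A small sketch to decode them (the `m2_size` helper is a made-up name for this post):

```shell
# m2_size CODE: split an M.2 size code into width and length in mm.
# Hypothetical helper; assumes the width is always the first two digits.
m2_size() {
  code=$1
  length=${code#??}          # drop the first two digits
  width=${code%"$length"}    # what is left in front is the width
  echo "${width}mm x ${length}mm"
}

m2_size 2230    # prints 22mm x 30mm (typical Wi-Fi card)
m2_size 2280    # prints 22mm x 80mm (typical desktop SSD)
m2_size 22110   # prints 22mm x 110mm
```

So a 2242 SSD is as wide as a 2280 one and only differs in length, which is what matters when space inside the case is tight.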

What does the NUC have?

At this point I decided to do some digging. It was clear that I needed a non-SATA, NVMe SSD. Most of the ones sold now are PCIe 3.0 with 4 or 2 lanes, and have an M.2 type-M connector. The question was: what does the NUC have?

Thank god for the A and E markings on the wireless card, which show which M.2 socket it uses.

Looking at the wireless card and its spec, it seems to be a 2230 (22mm x 30mm) M.2 card keyed for type A and type E simultaneously.

However, it is unclear what kind of PCI Express interface and lane count the slot supports. For that, we’ll use the following lspci command:

$ sudo lspci -vv | grep -E 'PCI bridge|LnkCap'

00:13.0 PCI bridge: Intel Corporation Celeron N3350/Pentium N4200/Atom E3900 Series PCI Express Port A #1 (rev fb) (prog-if 00 [Normal decode])

LnkCap: Port #3, Speed 5GT/s, Width x1, ASPM not supported

00:13.1 PCI bridge: Intel Corporation Celeron N3350/Pentium N4200/Atom E3900 Series PCI Express Port A #2 (rev fb) (prog-if 00 [Normal decode])

LnkCap: Port #4, Speed 5GT/s, Width x1, ASPM not supported

00:13.2 PCI bridge: Intel Corporation Celeron N3350/Pentium N4200/Atom E3900 Series PCI Express Port A #3 (rev fb) (prog-if 00 [Normal decode])

LnkCap: Port #5, Speed 5GT/s, Width x1, ASPM not supported

The Speed 5GT/s corresponds to PCIe 2.0 and Width x1 means a single lane. That’s ok: according to the spec, PCIe is backwards compatible on both version and lane count. Things will be slower, but should work.
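To put a number on "slower": the GT/s figure is a raw signalling rate, and part of it is spent on line encoding (8b/10b for PCIe 1.x and 2.0, 128b/130b from 3.0 onwards). A rough sketch of the resulting ceiling, ignoring protocol overhead (the `pcie_mb_per_s` helper is a made-up name for this post):

```shell
# pcie_mb_per_s GT_PER_S LANES: approximate usable link bandwidth in MB/s.
# Hypothetical helper; only line encoding is accounted for, not packet overhead.
pcie_mb_per_s() {
  awk -v gt="$1" -v lanes="$2" 'BEGIN {
    eff = (gt >= 8) ? 128 / 130 : 8 / 10   # line-encoding efficiency
    # GT/s * efficiency = usable Gbit/s per lane; divide by 8 bits for GB/s
    printf "%.0f\n", gt * eff / 8 * 1000 * lanes
  }'
}

pcie_mb_per_s 5 1   # the NUC slot, PCIe 2.0 x1: prints 500
pcie_mb_per_s 8 4   # a typical PCIe 3.0 x4 SSD slot: prints 3938
```

Around 500 MB/s is in the same range as a SATA III link, so large sequential transfers will be capped well below what the SSD could do in an x4 slot. Small random I/O, which is the problem here, needs far less bandwidth than that.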

Buying an SSD and a converter

Since the NUC is tiny (the board is 10cm x 10cm), it was unlikely that the most commonly available 2280 (80mm) SSDs would fit inside. Moreover, most SSDs on the market come with a type-M connector.

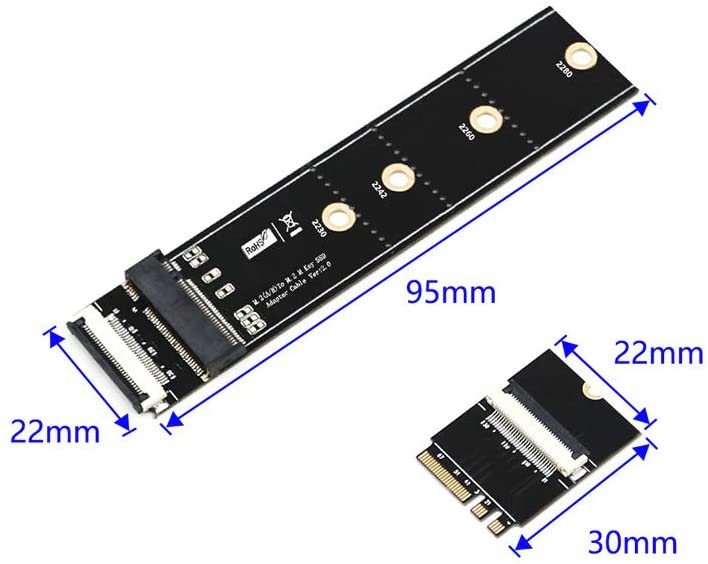

First, we’d need to find something that would re-pin type-M PCIe to type-A+E. It turns out that even during the peak of Coronavirus, Shenzhen is churning out all the possible variations of electronics. Converters like this are available on eBay and AliExpress… but I managed to get mine within 3 weeks of ordering it on Amazon:

Type-M 2230-2280 on one side, Type-A+E 2230. Exactly what I needed.

In terms of the actual SSD, there’s only a limited number of NVMe ones that are shorter than 80mm. As speed was not one of my purchase criteria (it’ll work over PCIe 2.0 x1 anyway), I went with a little-known SCY-branded 2242 device:

Really don’t need 256GB, but it seems nobody is selling smaller ones.

Putting it all together

The physical assembly proved quite tricky. Due to the tiny sizes (2cm in width) the devices and NUC internals feel extremely fragile. Opening the NUC is easy:

You can see how tricky placing everything in the small NUC will be. Also note the Type-M on the SSD vs the Type-A+E on the connector.

Replacing the wireless module with the converter was relatively easy, except for unclipping the antenna connectors, which required tweezers:

The NVMe SSD is connected and ready for testing

At this point I decided to see whether the thing works. Booting up to the BIOS showed a new device ID in the PCIe port, but without a name. Booting into Linux (with the root partition still on the USB device) showed things were looking good:

$ sudo lspci -v

(…)

02:00.0 Non-Volatile memory controller: Silicon Motion, Inc. Device 2263 (rev 03) (prog-if 02 [NVM Express])

Subsystem: Silicon Motion, Inc. Device 2263

(…)

Kernel driver in use: nvme

Kernel modules: nvme

#greatsuccess. The device is visible and loaded. Let’s see what’s there:

$ sudo fdisk -l

(…)

Disk /dev/nvme0n1: 238.49 GiB, 256060514304 bytes, 500118192 sectors

Disk model: P20C

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

(…)

Yup, NVMe devices show up as /dev/nvmeX, with the disk itself at /dev/nvme0n1. Everything seems to be working just fine :).
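The naming is a bit different from the /dev/sdX scheme: `/dev/nvme0` is the controller, `/dev/nvme0n1` is the first namespace on it (the actual block device), and `/dev/nvme0n1p1` is the first partition. A small sketch that takes such a name apart (the `nvme_name` helper is a made-up name for this post):

```shell
# nvme_name DEVICE: split a Linux NVMe device name into its components.
# Hypothetical helper; prints nothing for names that do not match.
nvme_name() (
  name=${1#/dev/}   # accept both /dev/nvme0n1 and nvme0n1
  echo "$name" | sed -n \
    -e 's/^nvme\([0-9][0-9]*\)n\([0-9][0-9]*\)p\([0-9][0-9]*\)$/controller=\1 namespace=\2 partition=\3/p' \
    -e 's/^nvme\([0-9][0-9]*\)n\([0-9][0-9]*\)$/controller=\1 namespace=\2/p' \
    -e 's/^nvme\([0-9][0-9]*\)$/controller=\1/p'
)

nvme_name /dev/nvme0n1     # prints controller=0 namespace=1
nvme_name /dev/nvme0n1p2   # prints controller=0 namespace=1 partition=2
```

In practice this means partitioning tools want /dev/nvme0n1, while filesystems go on /dev/nvme0n1p1 and friends.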

However, the case still wasn’t closed. To get there I needed to switch to a shorter cable, and cut short the SSD side of the board to match the 2242 length.

Closing it was a little bit tight, but Blu Tack helps to keep things in place.

Closing

After creating a temporary partition, I ran the same fio benchmark as above, this time with an 8GB file (the same size as on the laptop SSD):

read: IOPS=42.9k, BW=168MiB/s (176MB/s)(6141MiB/36639msec)

write: IOPS=14.3k, BW=55.0MiB/s (58.7MB/s)(2051MiB/36639msec); 0 zone resets

That’s over 14000 writes/second, in the same ballpark as the ~20000 writes/second of the laptop SSD and waaaay more than the 26 writes/second of the USB stick or the 50 writes/second of the HDD. Random reads and writes have improved roughly 550x over the USB stick and almost 290x over the HDD. Filesystem operations are now blazingly fast, and the Plex media library loads in an instant.
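If you want to redo the comparison on your own hardware, the ratios can be computed straight from fio's summary lines. This is only a sketch: `fio_iops` and `speedup` are names made up for this post, and the parser only understands plain numbers and the `k` suffix seen in the output above.

```shell
# fio_iops: read fio summary lines on stdin, print "<read|write> <iops>".
# Hypothetical helper; handles "IOPS=26" and "IOPS=14.3k" style values only.
fio_iops() {
  awk 'match($0, /IOPS=[0-9.]+k?/) {
    v = substr($0, RSTART + 5, RLENGTH - 5)   # text after "IOPS="
    mult = (v ~ /k$/) ? 1000 : 1
    sub(/k$/, "", v)
    kind = $1; sub(/:$/, "", kind)            # "write:" becomes "write"
    printf "%s %.0f\n", kind, v * mult
  }'
}

# speedup NEW OLD: how many times faster NEW is, rounded to a whole number.
speedup() {
  awk -v new="$1" -v old="$2" 'BEGIN { printf "%.0f\n", new / old }'
}

echo 'write: IOPS=14.3k, BW=55.0MiB/s (58.7MB/s)(2051MiB/36639msec); 0 zone resets' | fio_iops
speedup 14300 26   # NVMe vs USB stick, writes: prints 550
speedup 14300 50   # NVMe vs HDD, writes: prints 286
speedup 42900 79   # NVMe vs USB stick, reads: prints 543
```

The first command prints `write 14300`, which is the value fed into the `speedup` calls.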

While “duh, putting an SSD in makes it faster” is a well-known fact, and not much to write about, squeezing one into the small body of an Intel NUC that wasn’t meant to have one was a fun project. I now have an extremely capable tiny media server (12cm x 12cm) with a 256GB SSD and a 2TB HDD.

If you want to read about how to move a modern Ubuntu install from one drive to another, another blog post is coming.